Launch companies, satellite operators, and mega-constellations that both threaten the industry and hold remarkable potential for market disruption: we covered all of this in our previous articles, but there’s still something missing.

Diving into the Space-Data as-a-Service business model, we went from launch to sending equipment into LEO. And yet, what goes up must come down, and things do not stop once a satellite is active: it’s in the time between launch, commissioning, and eventual de-orbiting where the magic happens.

That magic is called space data. This isn’t just the coveted buzzword around which our society functions, but it is also the core of why we tackled this new, disruptive topic of the space industry.

How to get data: ground station (as-a-Service)

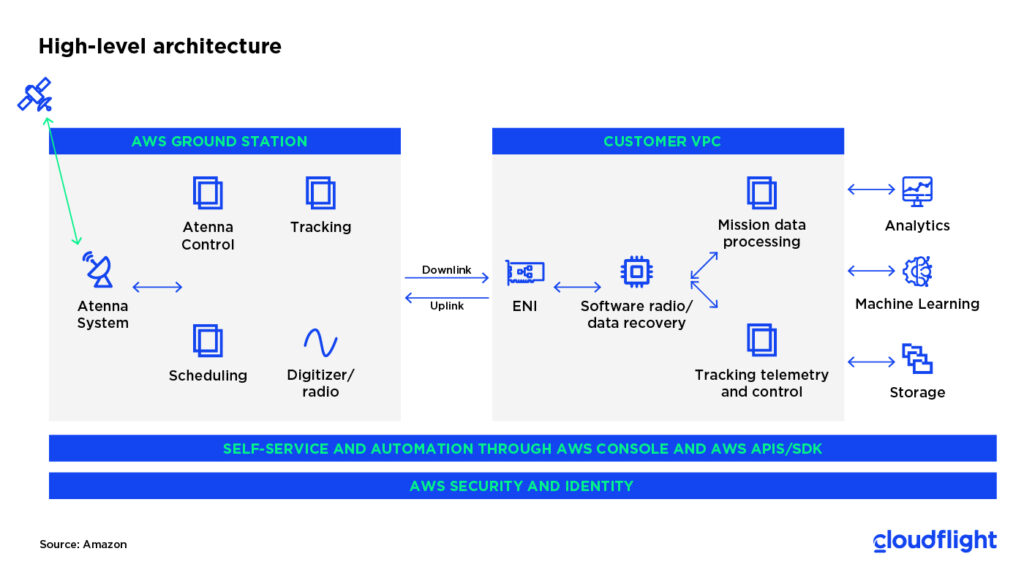

Satellites have only limited capabilities to store and process recorded data on-board: no matter how advanced, they are part of a much larger system in which they have to be in direct contact with a ground station to download their data in real-time. Satellite Ground Stations (SGS) are built for collecting and streaming remote sensing satellite data that is then provided to a variety of users and applications.

These stations are often located near the planet’s poles, because polar-orbiting satellites make the most overpasses there. They send radio signals to the satellite (uplink), such as spacecraft commands and new collection tasks, and receive data transmissions from the satellite (downlink). In some cases, they also serve as command and control centers for the satellite network. From the ground station, data can be analyzed or relayed to another location for analysis. Operators can monitor the satellite’s altitude, movement, and attitude, along with the status of its critical systems, and decision-makers can control the satellite.

Ground stations, however, are quite expensive to build, operate and maintain. Furthermore, historically it has always been necessary to negotiate with the individual ground stations to get a communication slot assigned – here is where the as-a-Service business model comes into play. Introduced by AWS, K-Sat and Azure after their first entry in the market as space service providers, the concept of Ground Station as a Service (GSaaS) is simple: customers operating satellites or sensors in LEO can connect to them and gather the data at a lower cost. Besides a significant cut in costs and requirements, there are multiple benefits to this business model:

- Customers can concentrate on building the satellite and the application layer, knowing ground station services are already available for use.

- Customers have access to managed ground segments with global stations and network footprint. Building an equivalent infrastructure themselves would be a multi-million-dollar investment.

- No long-term commitment is required: there is a simple, pay-as-you-go model in place, usually per minute. Similar to other cloud services, it allows companies to run on a full OPEX model (excluding the cost of the satellites).

- Self-service scheduling, via APIs or console.

- First-come, first-served scheduling.
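
To make the last three points more concrete, here is a minimal sketch of how first-come, first-served booking and per-minute billing could work. It does not reflect the API of any actual provider: the `Antenna` class, the `request` and `contact_cost` functions, the station and operator names, and the rate are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Antenna:
    """A single (hypothetical) ground-station antenna and its booked contact windows."""
    name: str
    bookings: list = field(default_factory=list)  # tuples of (start, end, customer)

    def request(self, customer: str, start: datetime, minutes: int) -> bool:
        """Book the window if it is still free; earlier requests always win."""
        end = start + timedelta(minutes=minutes)
        for booked_start, booked_end, _ in self.bookings:
            # Two windows overlap when each one starts before the other ends.
            if start < booked_end and booked_start < end:
                return False
        self.bookings.append((start, end, customer))
        return True


def contact_cost(minutes: int, rate_per_minute: float) -> float:
    """Pay-as-you-go billing: the cost depends only on the booked minutes."""
    return minutes * rate_per_minute


antenna = Antenna("hypothetical-polar-station")
pass_start = datetime(2024, 1, 1, 12, 0)

# operator-a asks first and gets the 12:00-12:10 window.
first = antenna.request("operator-a", pass_start, minutes=10)
# operator-b asks for 12:05-12:15, which overlaps, so the request is rejected.
second = antenna.request("operator-b", pass_start + timedelta(minutes=5), minutes=10)
# operator-b retries with 12:10-12:20, which is free.
third = antenna.request("operator-b", pass_start + timedelta(minutes=10), minutes=10)

print(first, second, third)                     # True False True
print(contact_cost(10, rate_per_minute=3.0))    # 30.0 (placeholder rate, not a real price)
```

A real service would also have to check that the satellite is actually visible from the station during the requested window; the sketch leaves that out to keep the scheduling rule itself in focus.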

As we’ve done previously in this series, we interviewed a key player in the field and asked Leaf Space their opinion on the rise of the as-a-Service business model and, in particular, on Ground Stations.

Storing, processing & handling data

Data management handles the data flow from acquisition, processing and archiving to user access of the payload data in the ground segment of an Earth observation mission. It ensures a smooth flow of orders and requests throughout the system and provides monitoring and reporting functionalities.

Satellites provide important data that, for example, allows the rapid detection of changes to the environment and climate – this means, however, that the produced amounts of data are massive. In the case of the EU Copernicus Program, the satellites’ high-resolution instruments currently generate approximately 20 terabytes of data every day. New ideas and concepts are needed in order to be able to process data and turn it into information. Artificial Intelligence plays a major role in this, as such processes are extremely powerful, especially where large amounts of data are involved.
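
To get a feeling for that figure, a quick back-of-the-envelope calculation helps. The sketch below assumes decimal terabytes (10^12 bytes) and a constant data rate over the whole day; both are simplifications made here, not details taken from the Copernicus Program.

```python
TB = 10**12                       # assumption: decimal terabyte, in bytes
daily_bytes = 20 * TB             # roughly 20 TB per day, as quoted above
seconds_per_day = 24 * 60 * 60

# Average rate needed to move one day of data within one day.
sustained_gbit_per_s = daily_bytes * 8 / seconds_per_day / 10**9

# Archive growth if every day is kept for a year.
yearly_petabytes = daily_bytes * 365 / 10**15

print(f"{sustained_gbit_per_s:.2f} Gbit/s sustained")   # 1.85 Gbit/s sustained
print(f"{yearly_petabytes:.1f} PB per year")             # 7.3 PB per year
```

In other words, just keeping up with the incoming data means moving close to two gigabits every second around the clock, before any processing has taken place.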

Suitable storage and intelligent access options are required in order to handle and evaluate these large quantities of data. In recent years, public and private companies have developed platforms for the Exploitation of Earth Observation (EO) data in order to foster the usage of EO data and expand the market of EO-derived information. This domain is composed of platform providers, service providers who use the platform to deliver a service to their users, and data providers.

Technologies such as Big Data and cloud computing enable the creation of web-accessible data exploitation platforms, which offer scientists and application developers the means to access and use EO data in a quick and cost-effective way. In this context, Exploitation Platforms (EP) are virtual workspaces that provide user communities with access to large volumes of data, an algorithm development and integration environment, processing software and services, computing resources, and collaboration tools.

Solutions like the multi-cloud EO data processing platform provide the technology to integrate ICT resources and EO data from different vendors in a single platform. In particular, it offers multi-cloud data discovery, multi-cloud data management and access and multi-cloud application deployment. This platform has been demonstrated with the EGI Federated Cloud, Innovation Platform Testbed Poland, and the Amazon Web Services cloud.

Data transformation

Data transformation is the process of changing the format, structure, or values of data. Processes such as data integration, data migration, data warehousing, and data wrangling all may involve data transformation. Data is transformed for many reasons: to organize it so it is easier to use for both humans and machines, to improve data quality, and to facilitate compatibility between applications, systems, and types of data.

Data transformation can increase the efficiency of analytic and business processes, enabling better, data-driven decision-making. Here are some of the functions data transformation performs in the data analytics stack:

- Extraction and parsing

- Translation and mapping

- Filtering, aggregation, and summarization

- Enrichment and imputation

- Indexing and ordering

- Anonymization and encryption

- Modeling, typecasting, formatting, and renaming
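
A few of these functions can be sketched in a handful of lines. The example below uses only the Python standard library and a made-up telemetry sample; the field names, satellite IDs, and values are hypothetical and only serve to show parsing, renaming, filtering, typecasting, and aggregation in one small pipeline.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical raw telemetry, as it might arrive in CSV form.
RAW = """sat,ts,temp_c
SAT-A,2024-01-01T00:00:00,21.5
SAT-A,2024-01-01T00:01:00,
SAT-B,2024-01-01T00:00:00,19.0
SAT-A,2024-01-01T00:02:00,22.5
"""

# Mapping from terse source column names to descriptive target names.
RENAME = {"sat": "satellite_id", "ts": "timestamp", "temp_c": "temperature_c"}


def transform(raw: str) -> dict:
    """Return the mean temperature per satellite from raw CSV text."""
    rows = csv.DictReader(io.StringIO(raw))                  # extraction and parsing
    by_satellite = defaultdict(list)
    for row in rows:
        record = {RENAME[key]: value for key, value in row.items()}  # mapping and renaming
        if record["temperature_c"] == "":                    # filtering incomplete rows
            continue
        temperature = float(record["temperature_c"])         # typecasting
        by_satellite[record["satellite_id"]].append(temperature)
    # Aggregation and summarization: one mean value per satellite.
    return {sat: mean(values) for sat, values in by_satellite.items()}


result = transform(RAW)
print(result)  # {'SAT-A': 22.0, 'SAT-B': 19.0}
```

Here the row with a missing reading is simply dropped; filling it with an estimated value instead would be an example of the imputation step listed above.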

What to do with data: our role

As mentioned, satellite instruments generate large amounts of data – 24/7 over their entire lifetime, to be precise – and both accessing and storing it are challenges in and of themselves. Even after the data has been processed, it is not over: the results need to be scientifically validated. This requires manual steps which can only be done with the expertise of a scientist.

Processing data and getting results from it is something we already do, for example through our in-house Machine Learning platform. An even more relevant example here may be GRASP, where we support the scientists in improving their algorithm. We provide the GRASP team with a cloud-based development environment in which they can easily run GRASP on our data archive and evaluate the generated results using a tailor-made framework.

Archive here is the keyword. No matter its amount or its origin, data is essentially useless if, even after processing, there is no way of getting easy access to it and categorizing it. It is, in fact, only after this is done that it can be utilized, repurposed, and exploited to serve current industries, create new business models and explore new planets. One day, we may even use data to venture deeper into outer space to discover what’s next for our species.

When it comes to data usage, for another partner we are developing a near-real-time streaming and processing platform that handles thousands of positional updates per second, pushing data out to customers via streaming endpoints and providing historic insights into the last couple of years. Again, one of the biggest challenges is the vast amount of data that needs to be dealt with. Collecting and storing all of it can easily add up to tens or hundreds of billions of individual data points. However, in the end the customer just wants easy, uninterrupted, and reliable access in order to build their own business cases on top of it. Making sure that this happens from the moment data arrives from space until it leaves any data center (cloud or on-premise) is essential.

To find out more about our approach to the NewSpace industry and our view of its future, join our GetInspired Event: together with AAC Clyde Space, we will discuss the Space Data as a Service business model, AI, and much more.

Cloud Based Earth Observation Data Exploitation Platforms – https://ui.adsabs.harvard.edu/abs/2017AGUFMIN21F..03R/abstract