There is currently a lot of discussion about the legality and regulation of face recognition, especially in the EU. This post is not about that. Instead, I want to give a short insight into the technology behind face recognition: how it works, and how the same technology is used outside the context of face recognition. So if you want some background information to form your own opinion on this controversial topic, read on!

It’s not one simple thing!

Face recognition is not a single technology. It is a combination of several algorithms that work in conjunction, each of which is also used extensively outside of face recognition.

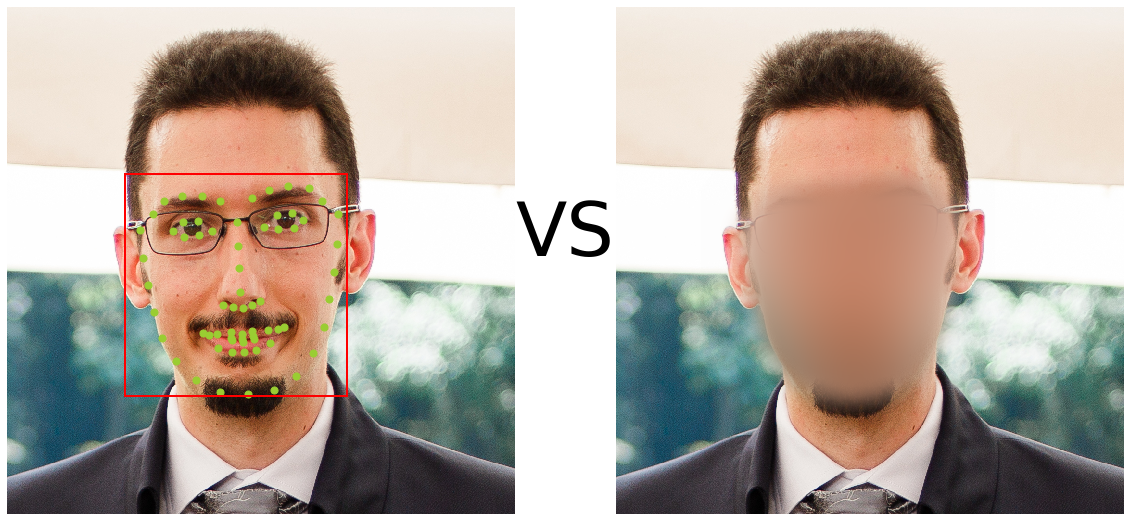

Face Detection

This set of algorithms, called ‘object detectors’ in the general case, is responsible for actually finding one or more faces in an image. Technically, they take an image as input and output the coordinates of the faces (or other objects) in that image.

Face Tracking

Now that we know where a face is in an image, the next challenge is to track this face over time. That is, if we know the coordinates of a face at a certain point in time in a video, we would like to know where it is in the frames that follow. Why? Object tracking algorithms (of which face tracking is a subset) are generally faster than object detection algorithms, so even if we do not have the computational resources to detect and recognize faces in every single frame, we can still follow them once we know they are there.
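The trade-off between detection and tracking can be sketched as a simple scheduling loop. This is only an illustration: `detect_faces` and `track_faces` are hypothetical placeholders for a slow detector and a fast tracker (they are not functions of any real library), and the interval `detect_every` is an assumed value.

```python
from typing import Callable, List, Sequence, Tuple

# A bounding box as (x, y, width, height) in pixels.
Box = Tuple[int, int, int, int]


def process_video(
    frames: Sequence[object],
    detect_faces: Callable[[object], List[Box]],
    track_faces: Callable[[object, List[Box]], List[Box]],
    detect_every: int = 10,
) -> List[List[Box]]:
    """Return the face boxes for every frame.

    The (hypothetical) detector runs only on every `detect_every`-th frame;
    on all other frames the cheaper (hypothetical) tracker updates the
    boxes that are already known.
    """
    boxes: List[Box] = []
    results: List[List[Box]] = []
    for index, frame in enumerate(frames):
        if index % detect_every == 0:
            boxes = detect_faces(frame)        # slow, but finds new faces
        else:
            boxes = track_faces(frame, boxes)  # fast, follows known faces
        results.append(list(boxes))
    return results


# Toy stand-ins so the sketch runs: a "frame" is just the x position of a face.
detector_calls = []


def toy_detect(frame):
    detector_calls.append(frame)
    return [(frame, 50, 40, 40)]


def toy_track(frame, previous):
    # Pretend the tracker moves each known box to the new x position.
    return [(frame, y, w, h) for (_, y, w, h) in previous]


tracked = process_video(list(range(0, 60, 2)), toy_detect, toy_track)
```

With 30 toy frames and the assumed interval of 10, the expensive detector is called only three times, while every frame still gets a box.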

Facial Landmark Detection

After having found and tracked all faces, our next task is to find individual facial landmarks in each face. What does this mean? We “simply” find the locations of the eyes, the nose, the mouth and so on. Technically, this is commonly done using a machine learning technique called regression, which predicts continuous values (here, the landmark coordinates).

These landmarks are then used to normalize the image. This means the image is transformed so that e.g. the eyes and the nose always end up at the same location, which makes the next steps much easier and is what makes reasonable performance achievable in the first place.
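One simple way to picture this normalization is a similarity transform (rotation, uniform scaling and translation) that moves the two detected eyes onto fixed target positions. The sketch below only maps coordinates, not pixels, and the target positions for a 100×100 crop are assumptions chosen for illustration. Real systems may use more landmarks and a different kind of transform.

```python
from typing import Callable, Tuple

Point = Tuple[float, float]


def eye_alignment(
    left_eye: Point,
    right_eye: Point,
    target_left: Point = (30.0, 40.0),   # assumed position in a 100x100 crop
    target_right: Point = (70.0, 40.0),  # assumed position in a 100x100 crop
) -> Callable[[Point], Point]:
    """Return a function mapping image points to normalized coordinates.

    Points are treated as complex numbers, so the similarity transform
    can be written as z -> a * z + b.
    """
    src_l, src_r = complex(*left_eye), complex(*right_eye)
    dst_l, dst_r = complex(*target_left), complex(*target_right)
    if src_l == src_r:
        raise ValueError("eye landmarks must be two distinct points")
    a = (dst_r - dst_l) / (src_r - src_l)  # rotation and scale
    b = dst_l - a * src_l                  # translation

    def transform(point: Point) -> Point:
        z = a * complex(*point) + b
        return (z.real, z.imag)

    return transform


# A tilted face: the right eye sits lower in the image than the left one.
align = eye_alignment(left_eye=(100.0, 100.0), right_eye=(180.0, 160.0))
aligned_left = align((100.0, 100.0))
aligned_right = align((180.0, 160.0))
```

After the transform, both eyes land on the target positions regardless of how the face was tilted or scaled in the original image.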

Facial Embedding

This is the centerpiece of every face recognition system, but also the hardest part to grasp. This algorithm takes a normalized image and produces a set of numbers (commonly 128 numbers). Taken individually, these numbers look more or less arbitrary and are quite meaningless, but together they have an interesting property: if we compute the numbers for two pictures of the same person, they will be similar; if we compute them for two different persons, they will (hopefully) be dissimilar.
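What “similar” means can be illustrated with a distance measure. The sketch below uses the Euclidean distance, which is one common choice but not necessarily the one a given system uses. The embeddings are made-up four-number vectors for readability, not the output of a real model.

```python
import math
from typing import Sequence


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Distance between two embeddings; smaller means more similar."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Made-up embeddings (real ones would commonly have 128 numbers).
anna_photo_1 = [0.10, 0.80, -0.30, 0.50]
anna_photo_2 = [0.12, 0.75, -0.28, 0.55]
ben_photo = [-0.60, 0.10, 0.70, -0.20]

same_person = euclidean_distance(anna_photo_1, anna_photo_2)
different_person = euclidean_distance(anna_photo_1, ben_photo)
```

Here the distance between the two pictures of the same (fictional) person is much smaller than the distance between pictures of different persons.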

How these numbers are generated is out of scope for this post; what matters for now is how they are used.

Facial Recognition

During an enrollment phase, these numbers are computed for a set of persons and stored in a database. During inference, each currently visible face is compared against this database to compute its similarity to the enrolled persons.

With that in mind, it is easy to see the difference between verification and identification.

Verification is the much simpler case: the currently visible face (and thus the current set of numbers) is compared to a single entry in the database, answering the question “Is person A really in front of the camera right now?”.

Identification, on the other hand, answers the question “Who is the person currently in front of the camera?”. To answer that, the current set of numbers has to be compared to each and every set of numbers in a potentially large face database.
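The difference between the two can be sketched in a few lines. This is a minimal illustration under stated assumptions: the database is a plain dictionary of made-up embeddings, the distance is Euclidean, and the threshold of 0.6 is an assumed value that would have to be tuned for a real model.

```python
import math
from typing import Dict, Optional, Sequence

Embedding = Sequence[float]

THRESHOLD = 0.6  # assumed value; depends on the embedding model


def distance(a: Embedding, b: Embedding) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def verify(probe: Embedding, database: Dict[str, Embedding],
           claimed_name: str, threshold: float = THRESHOLD) -> bool:
    """1:1 comparison: is the probe the person it claims to be?"""
    return distance(probe, database[claimed_name]) <= threshold


def identify(probe: Embedding, database: Dict[str, Embedding],
             threshold: float = THRESHOLD) -> Optional[str]:
    """1:N comparison: who, if anyone, in the database is the probe?"""
    best_name, best_distance = None, float("inf")
    for name, enrolled in database.items():
        d = distance(probe, enrolled)
        if d < best_distance:
            best_name, best_distance = name, d
    return best_name if best_distance <= threshold else None


# Enrollment: made-up embeddings for two fictional persons.
database = {
    "anna": [0.10, 0.80, -0.30, 0.50],
    "ben": [-0.60, 0.10, 0.70, -0.20],
}
probe = [0.12, 0.75, -0.28, 0.55]

is_anna = verify(probe, database, "anna")
is_ben = verify(probe, database, "ben")
who = identify(probe, database)
stranger = identify([0.9, -0.9, 0.9, -0.9], database)
```

Verification needs a single comparison, while this naive identification loops over the whole database. Large systems typically replace the loop with a faster nearest-neighbor search.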

Face Recognition Outside Surveillance

Face detection and tracking can be (and indeed are) used for the fully automatic redaction (i.e. anonymization) of images and videos; see Google Street View for an example.
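As a rough sketch of what such a redaction step could look like once the face boxes are known, the following replaces every boxed region of a grayscale image with its mean value. The image representation (a list of rows) and the box format are assumptions for illustration. Production systems would typically blur or pixelate real image buffers instead.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]  # (x, y, width, height)


def redact(image: Image, boxes: List[Box]) -> Image:
    """Return a copy of `image` with every box filled with its mean value."""
    result = [row[:] for row in image]
    height = len(image)
    width = len(image[0]) if image else 0
    for x, y, w, h in boxes:
        # Clip the box to the image borders.
        x0, y0 = max(x, 0), max(y, 0)
        x1, y1 = min(x + w, width), min(y + h, height)
        pixels = [image[r][c] for r in range(y0, y1) for c in range(x0, x1)]
        if not pixels:
            continue
        mean = sum(pixels) // len(pixels)
        for r in range(y0, y1):
            for c in range(x0, x1):
                result[r][c] = mean
    return result


image = [
    [10, 10, 10, 10],
    [10, 200, 100, 10],
    [10, 100, 200, 10],
    [10, 10, 10, 10],
]
redacted = redact(image, [(1, 1, 2, 2)])
```

The detail inside the box is gone in `redacted`, while pixels outside the box and the original `image` stay untouched.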

Facial landmark detection is used, for example, in the smile detection of modern cameras or the “closed eyes” detection of photo management software.

Even face recognition can have practical applications outside of surveillance: tagging persons in personal photo collections or automation in smart home applications.

Outside Face Recognition

Each building block of a face recognition system has a lot of applications outside of the face recognition use case – and in most cases is actually primarily being used outside of face recognition.

Object detection and tracking form the basis of a variety of industrial applications (like production line monitoring, vision-based QA, …) and infrastructure installations (e.g. traffic analysis), and are even a key safety feature in self-driving cars (e.g. pedestrian detection and avoidance, traffic sign recognition, …).

Embedding networks are used in applications like natural language processing (NLP) tools, recommendation engines and anomaly detection tools, or as a preprocessing step for various visualizations in data science applications.

Face Recognition at Cloudflight

We developed a variety of face recognition applications over the last couple of years, both for internal use and for our customers – none of which are in the context of surveillance.

Examples are automatic live video redaction and *anonymization systems* that ensure the privacy of individuals when recording videos in public spaces, as well as smart signs scattered around our office that display personalized information depending on the individual in front of them.

As with many other artificial intelligence applications, facial recognition systems have a wide range of use cases *outside of the obvious* surveillance application, which is often the only one discussed.

We regularly organize ‘Get inspired’ events and publish blog entries to give an overview of our portfolio and experience. Let us inspire you: check out the event section on our website and visit us in our offices. Throughout our articles and events, we would like to present our one brand to you: who we are, how we think outside the box, how we do things the Cloudflight way and which opportunities open up. Follow us online and on-site!